Emerging technologies affect many aspects of our lives. Driverless cars are no different, as Dr. Lars Kunze recently demonstrated in a talk titled “Driven by AI” to community members at the Upper Norwood Library Hub in South London. The talk is part of a series that aims to involve the community in decision-making processes involving Artificial Intelligence (AI).

In his talk, Dr. Kunze elaborated on the motivations behind developing driverless cars, how they are built, and how they could responsibly be integrated into society. In this post, we’ll summarise the content of the talk and share some highlights from the community discussion.

Why do efforts around driverless cars exist?

Safety. The World Health Organisation (WHO) estimates that 1.3 million fatalities take place every year due to road traffic incidents. Around 95% of these fatalities are attributed to human error. It is hypothesised that automating the task of driving can reduce the human error element. As such, many nations are considering the use of driverless cars to help them reach their targets to reduce road fatalities (e.g., the EU has a target of reaching zero road fatalities by 2050).

Increased Mobility. Another benefit of driverless cars is increased mobility and accessibility for those who cannot drive themselves, such as elderly or disabled people, and for populations in rural areas.

Sustainability. Driverless cars can drive more efficiently than human drivers and have the potential to combat climate change by reducing fuel consumption, traffic congestion, and carbon emissions.

Economy and Job Market. Opening up the driverless car market can also lead to the creation of jobs along with other economic benefits.

How are driverless cars developed?

Driverless cars are equipped with software and hardware that enable them to take control of driving tasks. The main tasks of driverless cars can be categorised into: (1) sensing the environment using sensing technologies such as cameras, LiDAR, and RADAR; (2) constantly processing information from these sensors; (3) predicting the trajectories and movements of other agents, such as other cars and pedestrians; (4) making decisions on what to do next (e.g., accelerating, braking, changing direction); and finally (5) acting on and performing these decisions.
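To make the five tasks above more concrete, here is a minimal Python sketch of one pass through such a loop. It is an illustration only, not how any real driverless car is built: the `Agent` class, the function names, the one-dimensional lane, the constant-speed prediction, and the 10-metre safe gap are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Another road user, reduced to a position along the car's lane (hypothetical)."""
    distance_m: float  # gap between the car and the agent; positive means ahead
    speed_mps: float   # agent's speed in the same direction as the car


def sense(world):
    """(1) Sensing: stand-in for camera, LiDAR and RADAR readings."""
    return {"raw_agents": world["agents"], "ego_speed_mps": world["ego_speed_mps"]}


def process(readings):
    """(2) Processing: keep only the agents that are in front of the car."""
    ahead = [a for a in readings["raw_agents"] if a.distance_m > 0]
    return {"agents": ahead, "ego_speed_mps": readings["ego_speed_mps"]}


def predict(scene, horizon_s=2.0):
    """(3) Prediction: constant-speed estimate of each gap after horizon_s seconds."""
    ego = scene["ego_speed_mps"]
    return [a.distance_m + (a.speed_mps - ego) * horizon_s for a in scene["agents"]]


def decide(predicted_gaps, safe_gap_m=10.0):
    """(4) Decision: brake if any predicted gap falls below the assumed safe gap."""
    if any(gap < safe_gap_m for gap in predicted_gaps):
        return "brake"
    return "keep_speed"


def act(decision):
    """(5) Acting: stand-in for sending a command to the vehicle's actuators."""
    return f"command sent: {decision}"


def drive_step(world):
    """Run one sense, process, predict, decide, act cycle."""
    return act(decide(predict(process(sense(world)))))


if __name__ == "__main__":
    slow_car_ahead = {"ego_speed_mps": 15.0, "agents": [Agent(20.0, 5.0)]}
    print(drive_step(slow_car_ahead))
```

In a real vehicle each of these steps is a large research area in its own right, and the loop runs many times per second rather than once.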

To successfully take control of the driving task, driverless cars must be able to answer the following questions, which also represent much of the research being done in this field:

Where am I? This involves the car identifying where it is located and building a map of the environment surrounding it.

What surrounds me? This involves identifying and perceiving what surrounds the car to differentiate between drivable surfaces and other objects, whether they are static or dynamic.

What should I do? This involves acting on the given information and performing the appropriate actions.

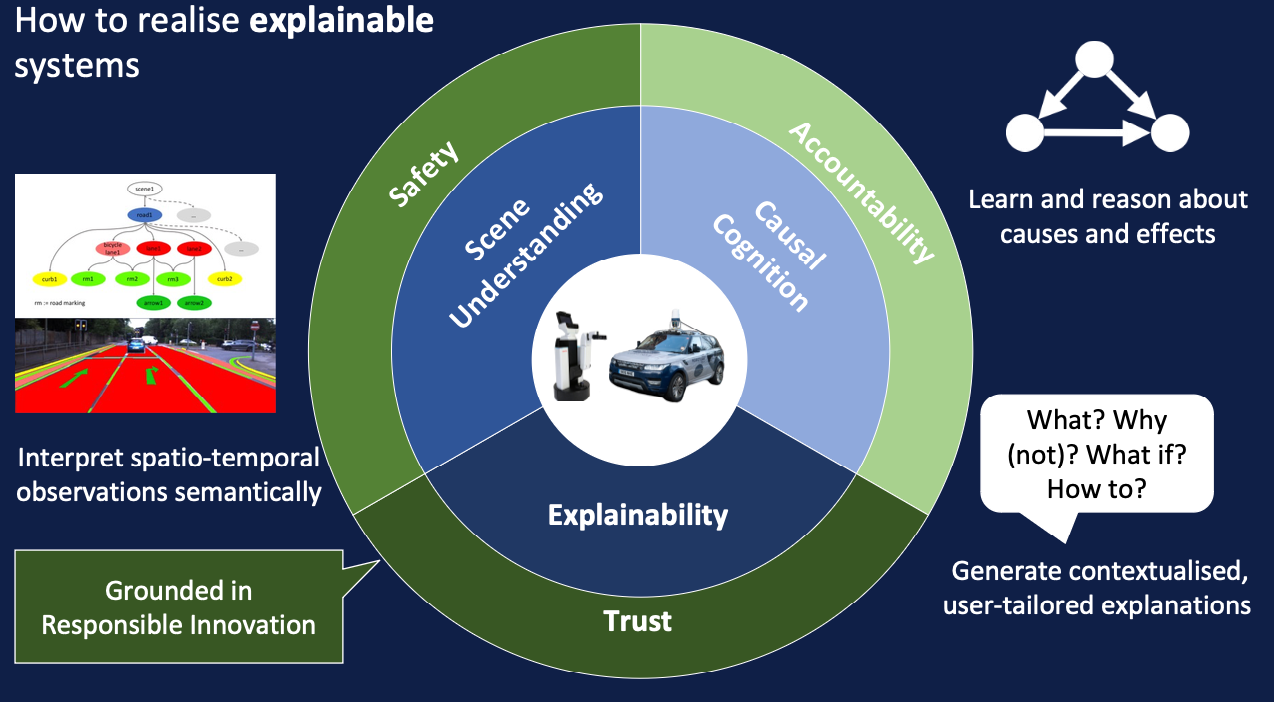

While one of the motivations for having driverless cars is to eliminate human error, it is important to note that the development of these systems cannot happen in isolation from people. In fact, their success depends on how well they are integrated into society as a whole. This brings themes such as accountability, trust, and transparency into play, and these themes need to be addressed for different stakeholder groups (e.g., users, developers, regulators). For example, driverless cars produce many video recordings of their surroundings, which has important privacy implications. What happens to this data and who has access to it? Who is accountable?

One way towards responsible development of driverless cars is to clarify who is accountable when an accident happens. A key element in understanding this is explainability. Good explainable systems can serve different stakeholder groups, depending on the case and what needs to be explained. Examples include explaining why a driverless car made a particular decision, or why it didn’t. One way to generate explanations for driverless cars is to interpret the static and dynamic features of a scene into natural language. There are many other techniques involved. Developing explainable and transparent systems requires an appreciation of both the complexity of the system itself and the task of translating this complexity into information that builds intuition about how and why the system behaves the way it does.
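As a rough illustration of turning static and dynamic scene features into natural language, the Python sketch below fills in a fixed sentence template. This is an assumption made for this post, not the technique presented in the talk: the `explain` function, its parameters, and the example features are hypothetical, and real explanation systems are far more involved.

```python
def explain(action, static_features, dynamic_features):
    """Build a one-sentence, template-based explanation for a driving action.

    static_features:  things that do not move, e.g. "a red traffic light"
    dynamic_features: things that move, e.g. "a pedestrian crossing ahead"
    """
    reasons = list(static_features) + list(dynamic_features)
    if not reasons:
        return f"The car chose to {action}; no notable scene features were detected."
    if len(reasons) == 1:
        joined = reasons[0]
    else:
        # Join all but the last reason with commas, then add "and" before the last.
        joined = ", ".join(reasons[:-1]) + " and " + reasons[-1]
    return f"The car chose to {action} because of {joined}."


if __name__ == "__main__":
    print(explain("stop", ["a red traffic light"], ["a pedestrian crossing ahead"]))
```

A template like this is easy to follow, but it only repeats what the system detected. Explaining why a car did not take an action, or tailoring the explanation to a user, developer, or regulator, needs considerably more than this.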

Even when looking at the benefits mentioned earlier in this post, more questions arise about how these benefits are realised:

Safety. How safe is safe enough? Should driverless cars be as safe as human drivers or safer? Who is accountable when there is an accident?

Mobility. Who will have access to this new mode of mobility? Will it only be affordable to rich populations?

Sustainability. What is the cost against the sustainability benefits? How can this be measured?

These implications make it essential to involve the broader community in the decision-making process. In the community lab portion of the talk, the audience was prompted to respond to three questions:

- What would you like to see happen in the future of driverless cars?

- What can we do as a community to respond to developments in this area?

- What do you want your government and other bodies/institutions to put in place to support safe and inclusive development of driverless cars?

In discussing these questions, the audience reflected on a shared vision for driverless cars and highlighted potential risks they would like to avoid. Some examples of topics discussed included preferences around private car ownership and public transportation, concerns around privacy, concerns around the impact of driverless cars on law enforcement, and ensuring that driverless cars are able to safely drive across different road scenarios and contexts.

The advantage of the community lab lies in emphasising the role of the community, as an essential stakeholder group, in employing its contextual expertise and influencing decision-making around these technologies to serve the public’s best interest.

Blogpost by Jumana Baghabrah